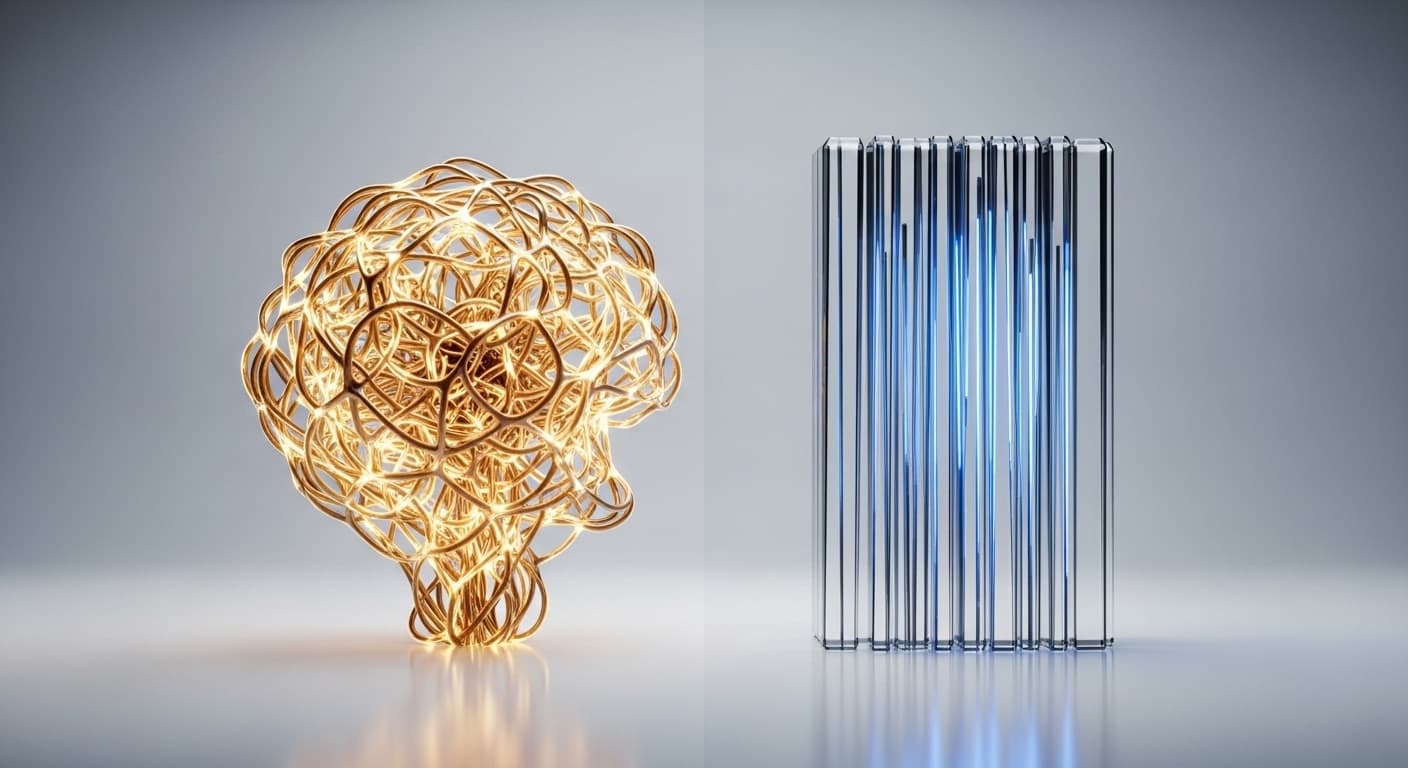

AI Brain vs. Human Mind: A Guide to How LLMs Really Work

Have you ever wondered if the AI you're chatting with thinks like you do? We see them producing poems, writing code, and answering complex questions, and it's easy to assume their internal world is a lot like ours. As it turns out, the way a Large Language Model (LLM) "thinks" is fundamentally different from a human mind.

This guide will take you on a journey into the core differences between human and AI cognition. We'll explore six key areas where our paths diverge, breaking down complex processes into simple, digestible explanations. By the end, you'll have a much clearer mental model for what's happening under the hood of an LLM.

1. Learning: Lived Experience vs. Pure Mathematics

The way we absorb information is the foundation of our intelligence. Both you and an AI learn, but the process couldn't be more different.

How You Learn 🧠

Your brain is incredibly dynamic. It constantly rewires itself through a process called neuroplasticity. Think of your brain as a dense forest. Every time you learn something new—like riding a bike or memorizing a new command—you forge a new path. The more you use that information, the wider and clearer that path becomes.

Neuroplasticity is the brain's ability to be easily shaped or molded. It occurs due to learning, experience, and memory formation. New experiences cause new neural pathways to strengthen, while pathways used infrequently become weak and eventually die off in a process called synaptic pruning.

This is why you can learn a powerful lesson from a single experience, like touching a hot stove once, and remember it for a lifetime. Your brain physically changes to store that memory. It's an ongoing, adaptive process that happens with every new interaction with the world.

How AI Learns 🤖

An AI, like an LLM, learns through a much more mathematical process during a distinct "training" phase. It's fed a colossal amount of text and data, and its learning happens through an algorithm called backpropagation.

Imagine the AI is taking a giant, multiple-choice test with billions of questions (e.g., "what word comes after 'the cat sat on the...'?"). Initially, it guesses randomly. When it gets the answer ("mat"), it's told how wrong its other guesses were. Backpropagation is the process of going backward and adjusting millions of internal "knobs," or weights, ever so slightly, so the next time it's a little less wrong. Repeat this millions of times, and the AI becomes incredibly good at predicting the next word in a sequence.

LLM Pruning: Similar to the brain's synaptic pruning, LLM pruning is a process that simplifies a large model by selectively removing less important parts. This makes the model smaller and faster without significantly hurting its performance.

Unlike you, an LLM's core knowledge is "frozen" after its training. It isn't continuously learning from each new, individual conversation it has.

2. Processing: Weaving Meaning vs. Calculating Probability

Once knowledge is acquired, how is it used in the moment? Here, we see another fundamental split between interpreting meaning and performing math.

How You Process Information 🧠

Your brain is a master of parallel processing. Billions of your neurons can fire simultaneously, allowing you to handle multiple streams of information at once. When you read the word "apple," you don't just see letters. You might instantly:

- Picture a red or green fruit.

- Remember its taste and texture.

- Think of Apple the company.

- Recall the proverb "an apple a day..."

Your brain connects this single word to a vast, interconnected web of meaning, context, and memories almost instantly.

How AI Processes Information 🤖

An AI processes text in a much more linear, or sequential, fashion. It uses a method called tokenization to break down a sentence into pieces called tokens, which can be words or parts of words.

For an AI, "apple" is just a token. Based on its training, this token has a high mathematical probability of being associated with other tokens like "pie," "tree," or "iPhone." To figure out which words are most relevant in a given sentence, it uses a powerful mechanism called "attention."

Attention allows a model to weigh the importance of different words (tokens) in an input sequence when generating an output. This helps the model better understand context by focusing on the most relevant parts of the text.

This is an incredibly sophisticated form of pattern matching, but it lacks the rich, multi-sensory understanding that you have.

3. Memory: A Vast Library vs. A Temporary Whiteboard

Our ability to store and recall information is crucial. Here again, our approaches are worlds apart.

Your Memory System 🧠

Human memory isn't a single thing. Psychologists often refer to the multi-store model of memory, which includes distinct systems working together:

- Sensory Memory: A fleeting snapshot (less than 2 seconds) of what you just saw or heard.

- Working Memory: Your mental "scratchpad." It holds what you're actively thinking about (around 7 items for about 15-30 seconds).

- Long-Term Memory: Your vast, seemingly limitless storage for facts, experiences, and skills, which can last a lifetime.

Critically, your memory is associative. A smell can instantly trigger a childhood memory, or a song can transport you back to a specific moment. You learn and remember the relationships between unrelated items.

AI's Memory System 🤖

An AI's memory structure is much simpler. You can think of it in two main parts:

- Long-Term Memory: This is the knowledge baked into its weights during training. It's vast but fixed.

- Working Memory: For an LLM, this is its context window.

Think of the context window as a whiteboard. It's the space where your current conversation is written down. The AI can "see" everything on that whiteboard to inform its next response. However, this whiteboard has a fixed size. Once the conversation gets too long, the earliest parts get erased. The AI literally forgets what you talked about at the beginning of the conversation.

This limitation becomes a real problem when building practical AI systems. Imagine an AI trying to run a business for weeks or months—it would eventually forget crucial decisions it made early on. This is exactly the challenge I analyzed in Anthropic's Project Vend experiment, where I explored how a single long conversation trying to manage everything led to compounding errors and inconsistent decisions—and proposed architectural solutions to address these issues.

4. Reasoning: Applying Logic vs. Predicting Patterns

Are you a purely logical being? Is the AI truly reasoning with you? Let's look at the difference between finding an answer and predicting what an answer should look like.

How You Reason 🧠

Nobel prize-winning psychologist Daniel Kahneman described our thinking as a tale of two systems:

- System 1 is fast, intuitive, and automatic. It’s your gut reaction, like recognizing a face or answering "2+2".

- System 2 is slow, deliberate, and conscious. It's the logical reasoning you use to solve a complex math problem or weigh the pros and cons of a big decision.

When you reason, you are consciously engaging System 2, working through a problem step-by-step and applying rules of logic to arrive at a conclusion.

How AI "Reasons" 🤖

An LLM is trained on the output of human System 2 thinking—all the logical, well-structured text on the internet. It has learned what reasoning looks like.

When you ask an AI to solve a problem, it isn't logically thinking it through like you do. Instead, it's generating a sequence of tokens that is statistically the most plausible "reasoning-like" text to follow your prompt. This is why it can sometimes fail at very simple logic puzzles that a child could solve. It doesn't inherently grasp the rules of logic, only the textual patterns associated with it.

5. Errors: Filling Gaps vs. Mathematical Miscues

Both humans and AI can make things up. But the reasons why they do it reveal a key difference in their nature.

Human Error: Confabulation 🧠

When humans create false memories, it's often a phenomenon called confabulation.

Confabulation is a memory error involving the production of fabricated, distorted, or misinterpreted memories about oneself or the world, without the conscious intention to deceive.

This isn't intentional lying. It's the brain's good-faith attempt to fill in missing gaps in a memory to create a complete and coherent story. The person genuinely believes their fabricated memory is true. It’s a bug in our memory retrieval system.

AI Error: Hallucination 🤖

When an AI makes something up, it's called a hallucination. (Though some argue confabulation is a better term).

This happens when the model, in its mathematical quest to find the most probable next token, generates text that is nonsensical or factually incorrect. It isn't trying to fill a memory gap; it's simply following its programming, which can sometimes lead it to produce very confident-sounding falsehoods. It's a bug in its text-generation system.

6. Embodiment: A Lived Reality vs. A Read Reality

Finally, perhaps the biggest difference is our connection to the physical world.

Your Embodied Mind 🧠

You are an embodied being. Your thoughts and understanding are deeply shaped by the fact that you have a body and interact with the physical world. This is known as embodied cognition.

Your understanding of "heavy" comes from the experience of lifting things. Your understanding of "hot" comes from feeling warmth. This direct, first-hand experience gives you a foundation of common sense that is difficult to articulate but easy to use.

The Disembodied AI 🤖

An LLM is completely disembodied. It exists only as software on a server. It has never touched an object, felt the sun, or tasted food. Its entire knowledge of the physical world is second-hand, learned by reading descriptions written by humans who have.

This is why, despite its vast repository of facts, an AI can lack the most basic common sense about how the physical world operates.

Conclusion: Two Intelligences, One Future

Understanding these fundamental differences between human and AI cognition isn't just academic—it's the key to unlocking AI's true potential. When we grasp that an LLM operates through mathematical pattern-matching rather than conscious understanding, we can better appreciate both what it excels at and where it falls short.

This comparison reveals why AI is extraordinary at tasks like processing vast amounts of information, generating creative content, and recognizing complex patterns across domains. Yet it also explains why AI struggles with common sense, physical reasoning, and tasks that require genuine understanding of lived experience.

You bring meaning, context, and embodied comprehension to every interaction. AI offers incredible speed, computational power, and access to patterns across human knowledge that no individual could ever master. The real magic happens when we stop asking whether AI thinks like us, and start leveraging these complementary strengths—using AI as a powerful thinking partner while contributing the uniquely human elements of wisdom, judgment, and genuine understanding.

Inspiration: The core ideas for this article were inspired by this excellent video that explores similar concepts about AI and human cognition: AI vs Human Thinking: How Large Language Models Really Work.